Jonathan Downie made an interesting comment on Twitter this morning:

Interpreting will never be respected as a profession while its practitioners cling to the idea that they are invisible conduits.

Several things occurred to me about this and, in no particular order, I’m going to dump them out here (and then write in a little more detail how I feel about respect/interpreting).

- Some time ago I read a piece on the language industry and how much money it generated. The more I read it, the more I realised that there was little to no money in providing language skills; the money concentrated itself in brokering those skills: in agencies who buy and sell services rather than in the people who actually carry out the tasks. This is not unusual. Ask the average pop musician how much money they make out of their activities and then check with their record company.

- As particular activities become more heavily populated with women, the salary potential for those activities drops.

- Computers and technology.

Even if you dealt with the first two points, and I am not sure how you would, one of the biggest problems that people providing language services now have is the existence of free online translation services. For interpreters, given the ongoing confusion between translation and interpreting, the existence of Google Translate and MS’s Skype Translate will continue to undermine the profession.

However, the problem is much wider than that. There are elements of the technology sector who want lots of money for technology, but want the content that makes that technology salable for free. Wikipedia is generated by volunteers. Facebook runs automated translation and requests correction from users. Duolingo’s content is generated by volunteers and their product is not language learning, it is their language learning platform. In return, they expect translation to be carried out.

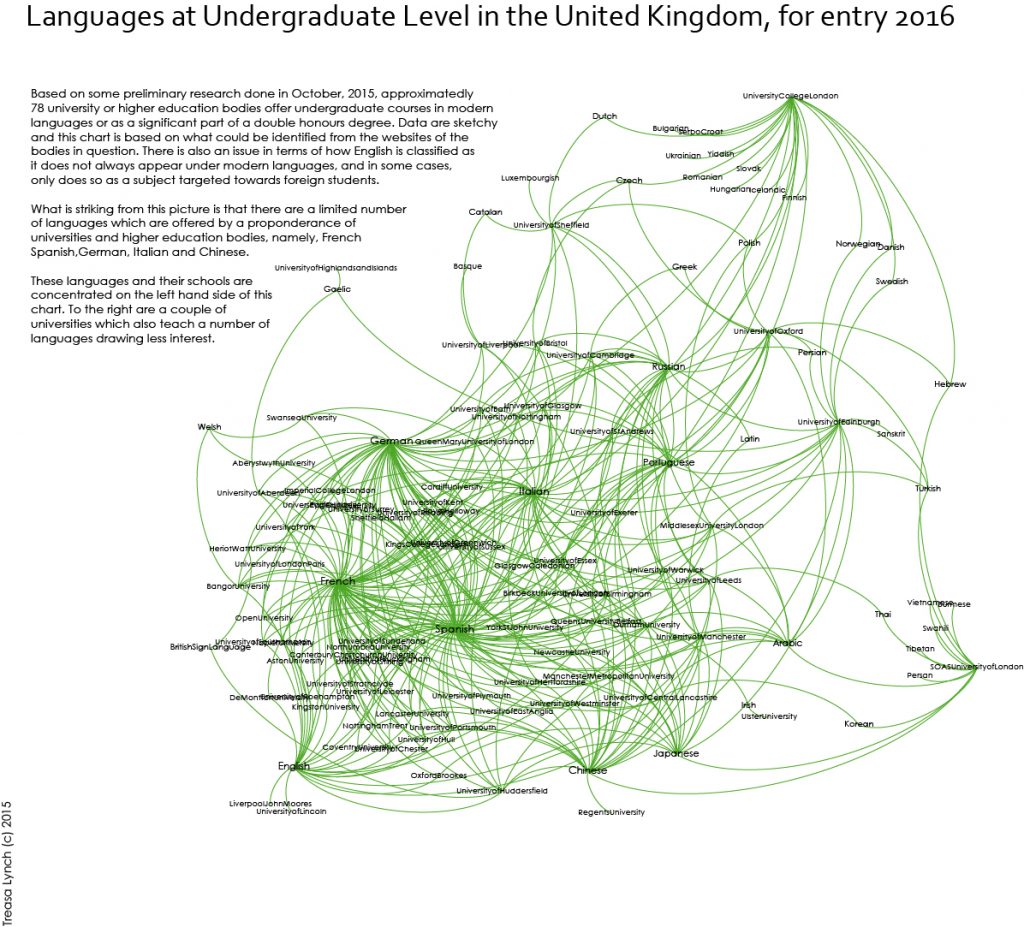

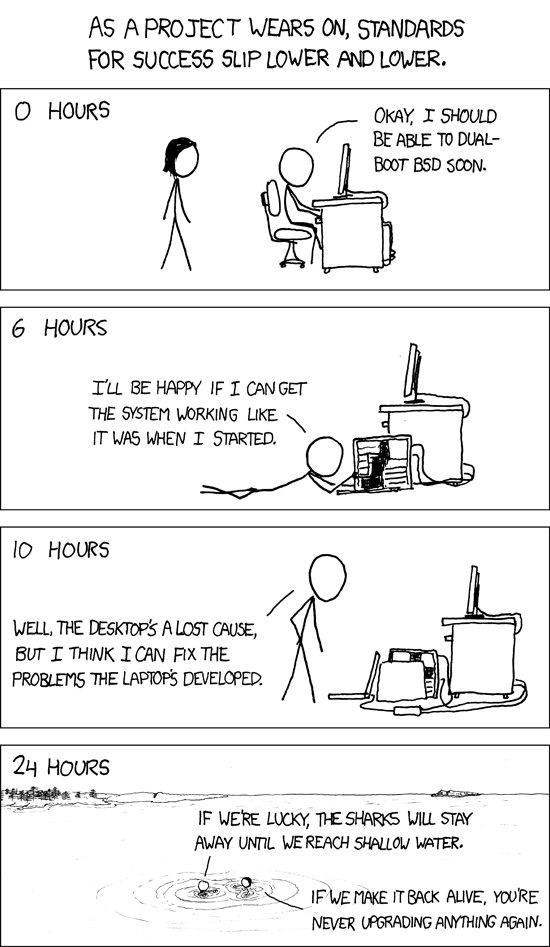

All of this devalues the human element in providing language skills. The technology sector is expecting it for free, and it is getting it for free, probably from people who should not be doing it either. This has an interesting impact on the ability of professionals to charge for work. This is not a new story. Automated mass production processes did it to the craft sector too. What generally happens is we reach a zone where “good enough” is a moveable feast, and it generally moves downwards. This is a cultural feature of the technology sector:

The technology sector has a concept called “minimum viable product”. This should tell you all you need to know about what the technology sector considers as success.

But – and there is always a but – the problem is not what machine translation can achieve but what people think it achieves. I have school teacher friends who are worn out from telling their students that running their essays through Google Translate is not going to provide them with a viable essay. Why pay humans a lot of money to do work when we can a) get it for free or b) get it for a lot less via machine translation?

This is the atmosphere in which interpreters, and translators, and foreign language teachers, are trying to ply their profession. It is undervalued because a lower quality product which supplies “enough” for most people is freely and easily available. And most people are not qualified to assess quality in terms of content, so they assess on price. At this point, I want to mention Dunning-Kruger because it affects a lot of things. When MH370 went missing, people who work in aviation comms technology tried in vain to explain that just because you had a GPS on your phone, didn’t mean that MH370 should be locatable in a place which didn’t have any cell towers. Call it a little knowledge is a dangerous thing.

Most people are not aware of how limited their knowledge is. This is nothing new. English as She is Spoke is a classic example dating from the 19th century.

I know well who I have to make.

My general experience, however, is that people monumentally overestimate their foreign language skills and you don’t have to be trying to flog an English language phrasebook in Portugal in the late 19th century to find them…

All that aside, though, interpreting services, and those of most professions, have a serious, serious image problem. They are an innate upfront cost. Somewhere on the web, there is advice for people in the technology sector which points out, absolutely correctly, that information technology is generally seen as a cost, and that if you are working in an area perceived to be a cost to the business, your career prospects are less obvious than those who work in an area perceived to be a revenue generating section of the business. This might explain why marketing is paid more than support, for example.

Interpreting and translation are generally perceived as a cost. It’s hard to respect people whose services you resent paying for, and this probably explains, for example, the grief with court interpreting services in the UK, and why teachers’ and health sector salaries are being stamped on while MPs are getting attractive salary improvements. I could go on but those are useful public examples.

For years, interpreting has leaned on an image of discretion, a silent service which is most successful if it is invisible. I suspect that for years, that worked because of the nature of the people who typically used interpreting services. The world changes, however. I am not sure what the answer is, although as an industry, interpreting needs to focus on the value add it brings and on why the upfront cost of interpreting is less than the overall cost of pretending the service is not necessary.